AI Vulnerability Database

An open-source, extensible knowledge base of AI failures

Mission

AI Vulnerability Database (AVID) is an open-source knowledge base of failure modes for Artificial Intelligence (AI) models, datasets, and systems. The goals of AVID are to:

- Build out a functional taxonomy of potential AI harms across the coordinates of security, ethics, and performance

- House full-fidelity information (metadata, harm metrics, measurements, benchmarks, and mitigation techniques if any) on evaluation use cases of a harm (sub)category

- Evaluate systems, models, and datasets for specific harms and persist the structured results into a single source of truth

What We Do

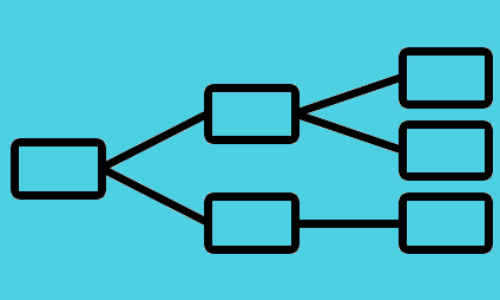

Our efforts have two focus areas: a Taxonomy of the different avenues through which an AI system can fail, and a Database of evaluation examples that contain structured information on individual instances of these failure (sub)categories. We also periodically release blog posts covering ongoing trends in AI risk management.